Enterprise Service Bus Specification¶

Introduction¶

The RailXplore APM (Asset Performance Monitoring) Enterprise Service Bus (ESB) allows you to retrieve near real-time information about your assets (devices) and the events and alarms they generate.

The main workflow consists of three steps: (1) subscribe, (2) get notified, (3) retrieve the relevant information.

RailXplore APM Interface via Enterprise Service Bus module¶

- RailXplore APM exposes several interfaces that enable external systems to consume data such as alarms, events, and device information.

- The main exposed interface is the Enterprise Service Bus (ESB).

- The interface publishes predefined alarms, events, and device details to the ESB and, via RailXplore Fusion, to its consumers.

- RailXplore Fusion can remove a subscribed consumer (e.g. if the consumer becomes unauthorized).

- RailXplore Fusion can temporarily block a subscribed consumer (e.g. if there is uncertainty about a consumer and its behavior, to prevent a denial-of-service attack).
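
The difference between removing and temporarily blocking a consumer can be sketched with a small in-memory registry. This is only an illustration: `ConsumerRegistry` and its methods are hypothetical names and do not describe how RailXplore Fusion is implemented.

```javascript
// Hypothetical sketch: ConsumerRegistry is NOT part of RailXplore Fusion.
class ConsumerRegistry {
  constructor() {
    // consumer ID -> timestamp (ms) until which the consumer is blocked (0 = not blocked)
    this.blockedUntil = new Map();
  }

  add(consumerId) {
    this.blockedUntil.set(consumerId, 0);
  }

  // Permanent removal, e.g. when the consumer becomes unauthorized.
  remove(consumerId) {
    this.blockedUntil.delete(consumerId);
  }

  // Temporary block: the consumer stays registered but receives nothing for a while.
  blockFor(consumerId, durationMs, now = Date.now()) {
    if (this.blockedUntil.has(consumerId)) {
      this.blockedUntil.set(consumerId, now + durationMs);
    }
  }

  canReceive(consumerId, now = Date.now()) {
    return this.blockedUntil.has(consumerId) && now >= this.blockedUntil.get(consumerId);
  }
}

const registry = new ConsumerRegistry();
registry.add("customer-a");
registry.blockFor("customer-a", 1000, 5000);                 // blocked from t=5000 to t=6000
const duringBlock = registry.canReceive("customer-a", 5500); // false
const afterBlock = registry.canReceive("customer-a", 6000);  // true
registry.remove("customer-a");
const afterRemoval = registry.canReceive("customer-a", 7000); // false
```

Timestamps are passed explicitly here so that the behavior is easy to follow; a real system would rely on the current time.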

External Systems¶

- External systems can consume published data such as alarms, events, or device data once authorized.

- External systems are able to subscribe to particular events

- External systems are able to unsubscribe from particular events

- External systems are notified of an update for a subject

- The ESB sub-system can publish alarms, events, and device lists according to well-defined formats
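
The subscribe, unsubscribe, and notify behavior listed above can be sketched in a few lines of plain JavaScript. This is a minimal in-memory illustration of the idea, not the ESB API: `TopicBus`, its methods, and the sample payloads are hypothetical.

```javascript
// Minimal in-memory sketch of subscribe / unsubscribe / notify.
// TopicBus and its method names are hypothetical, not part of the ESB API.
class TopicBus {
  constructor() {
    // topic name -> Set of subscriber callbacks
    this.subscribers = new Map();
  }

  subscribe(topic, callback) {
    if (!this.subscribers.has(topic)) {
      this.subscribers.set(topic, new Set());
    }
    this.subscribers.get(topic).add(callback);
  }

  unsubscribe(topic, callback) {
    const callbacks = this.subscribers.get(topic);
    if (callbacks) {
      callbacks.delete(callback);
    }
  }

  // Deliver one update to every current subscriber of the topic.
  publish(topic, update) {
    const callbacks = this.subscribers.get(topic) || [];
    for (const callback of callbacks) {
      callback(update);
    }
  }
}

const bus = new TopicBus();
const received = [];
const onAlarm = (alarm) => received.push(alarm);

bus.subscribe("Alarms", onAlarm);
bus.publish("Alarms", { id: 1 }); // delivered to onAlarm
bus.unsubscribe("Alarms", onAlarm);
bus.publish("Alarms", { id: 2 }); // not delivered, no subscriber is left
```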

API Properties and Capabilities¶

- The main API is based on the Observer design pattern.

- One-to-many relationship: many external systems may connect to one publisher, the ESB, and each of them can consume many topics.

- Methods to subscribe and unsubscribe a consumer.

- Authorization: must be granted by the upper system as a precondition.

- Public methods for data retrieval that can be called to retrieve a specific data type, for continuous pulling or pushing of data.

- The required parameters and types, public constants, variables, and naming scheme.

- To consume data from RailXplore APM, the related asset types need to be connected and, once contractually agreed, can be activated for subscription in RailXplore Fusion.

Architectural Considerations¶

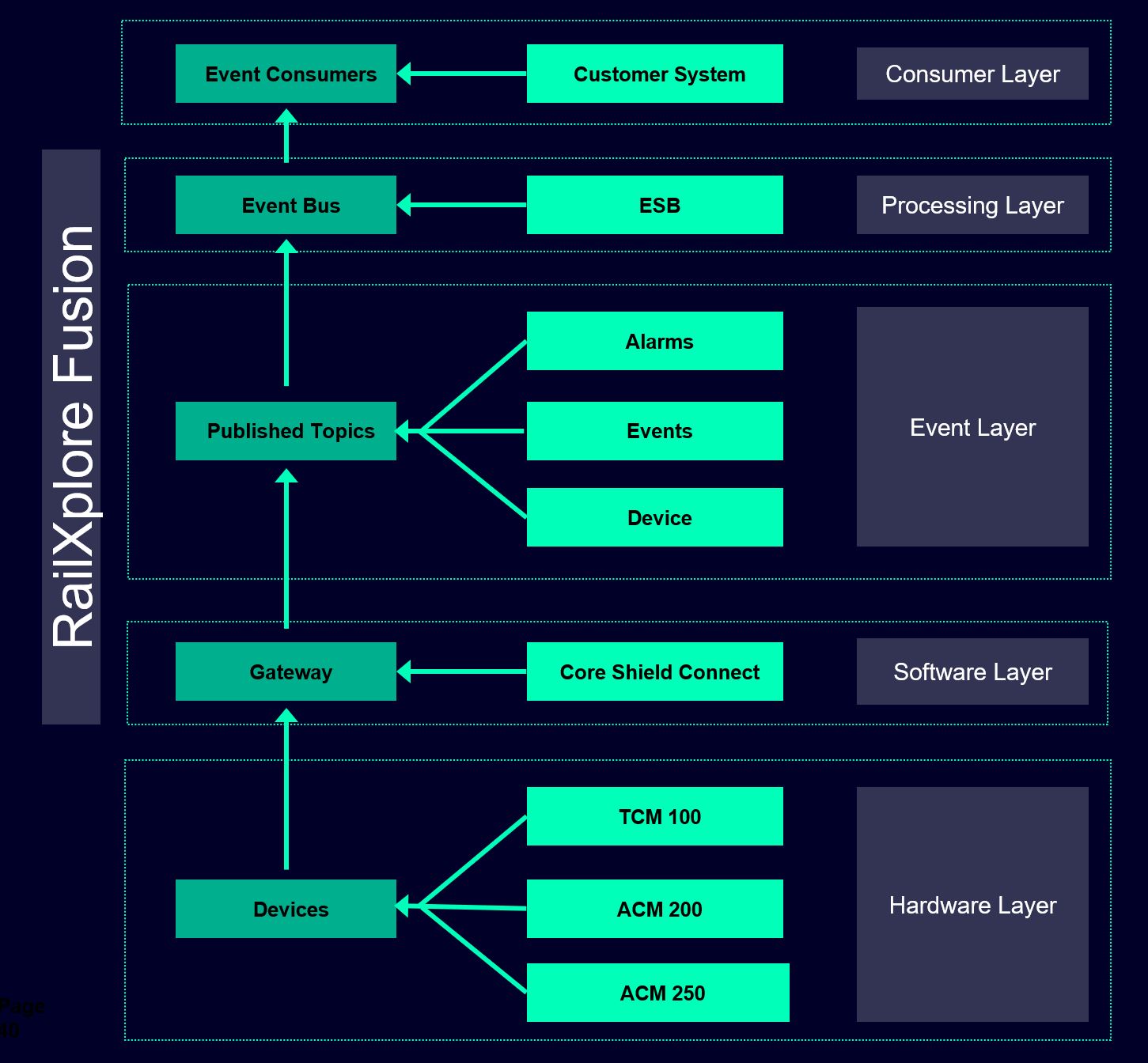

The figure shows a model of the Fusion building block. It illustrates the different cooperating layers (the producer and the consumer of the events, alarms, and device list), the related abstractions (generalizations, such as topics and devices), and their underlying specializations (concretizations, such as concrete devices and concrete topics). This model can scale according to the needs, for example by adding more consumers, more event processing, or additional concrete devices.

- Class Devices: concrete implementations of the device class are TCM100, ACM200, and ACM250. This class can be extended (scaled) to support additional devices that may be of interest in the long term.

- Class Gateway: the gateway is concretized with the RailXplore Core Shield Connect component. It can be replaced by other gateways if required.

- Class Published Topics: this class is concretized by the already well-defined topics such as Alarms, Events, Devices (including the information lists)

- Class Event Consumer: This class represents the subscribers or consumers of the subscription such as the customer systems (see more details below)

Architectural Design Pattern¶

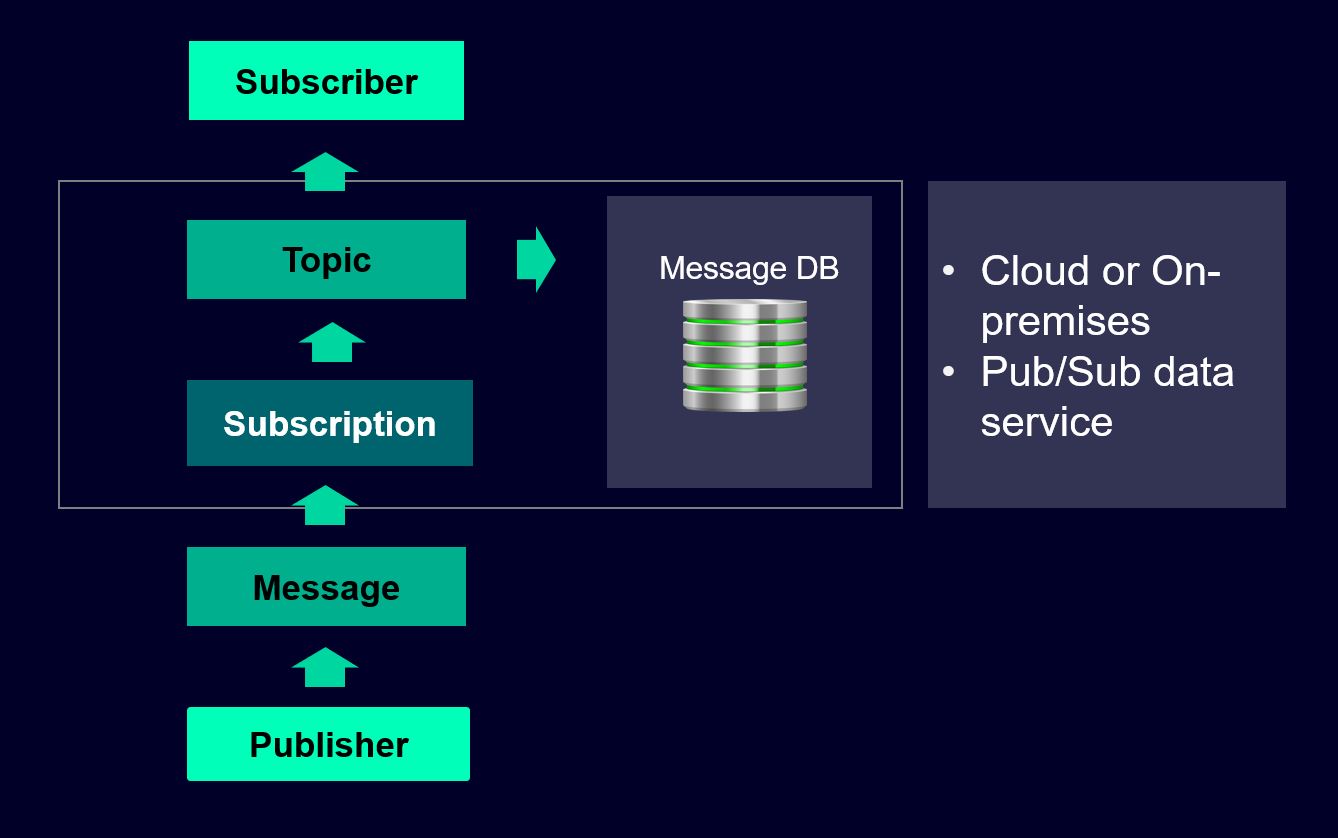

The model is based on the Observer/Observable software design pattern and its underlying implementation, known as Producer/Consumer or Publisher/Subscriber (short form: Pub/Sub).

The following describes the main workflow, which may vary depending on the requirements of the publisher, the subscriber, the environment, and the endpoints (from where the event is generated, such as the hardware layer, to where it is consumed by the subscriber, such as the customer system):

- A publisher application creates a topic in the Cloud Pub/Sub or On-premises service and sends messages to the topic.

- A message contains the required data and the underlying attributes that describe its content (event, alarm, device list, etc.).

- Messages can be persisted in a message database until they are delivered, or they may be deleted after a well-defined timeout (such as in Apache Kafka, a possible implementation of the Pub/Sub pattern within real-time data stream environments).

- Messages may be acknowledged by the subscribers.

- The Pub/Sub service forwards messages from a topic to all of its subscriptions.

- Each subscription receives messages either by the Pub/Sub service pushing them to the subscriber's defined endpoint (e.g. the customer system), or by the subscriber pulling them from the service.

- The subscriber receives pending messages from its subscription and may acknowledge each one to the Pub/Sub service.
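
The pull-and-acknowledge part of this workflow can be illustrated with a small in-memory model. It is a sketch under simplifying assumptions (a single subscriber, no persistence, no timeout); the `Subscription` class and the sample payloads are hypothetical and do not reflect how Kafka stores or tracks messages internally.

```javascript
// Hypothetical sketch of pull delivery with acknowledgement.
class Subscription {
  constructor() {
    this.pending = new Map(); // message ID -> message, kept until acknowledged
    this.nextId = 1;
  }

  // Called by the Pub/Sub service when a message is published to the topic.
  enqueue(payload) {
    const id = this.nextId++;
    this.pending.set(id, { id, payload });
    return id;
  }

  // The subscriber pulls all messages that have not been acknowledged yet.
  pull() {
    return Array.from(this.pending.values());
  }

  // Acknowledging a message removes it, so it is not delivered again.
  ack(id) {
    return this.pending.delete(id);
  }
}

const subscription = new Subscription();
subscription.enqueue({ type: "alarm" });
subscription.enqueue({ type: "event" });

const firstPull = subscription.pull();
subscription.ack(firstPull[0].id);      // acknowledge only the first message
const secondPull = subscription.pull(); // the unacknowledged message is returned again
```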

API Reference¶

Data Types¶

| Data Type | Description |
|---|---|
| Alarms | APM detects various types of alarms for trackside assets. Each alarm is published to the ESB as a separate JSON message as soon as it is detected in APM. |
| Events | The APM application can identify certain events, such as port status changes and board state changes. Each event is published as a separate JSON message as soon as it is detected in APM. |
| Alarms List | A list of alarms detected over a period, published as a JSON array. |
| Event List | A list of events detected over a period, published as a JSON array. |
| Device List | A list of active devices in APM. |
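
Because single alarms and events arrive as one JSON object while the list types arrive as a JSON array, a consumer may find it convenient to normalize both shapes into an array. The following is a minimal sketch of that idea; `parseEsbPayload` is a hypothetical helper, and the field named `placeholder` only stands in for the real message fields, which are not described here.

```javascript
// Sketch: turn a raw ESB payload (string or Buffer) into an array of records.
// parseEsbPayload is a hypothetical helper, not part of the ESB or of kafkajs.
function parseEsbPayload(rawValue) {
  const parsed = JSON.parse(rawValue.toString());
  // A single alarm or event is one JSON object; list types are JSON arrays.
  return Array.isArray(parsed) ? parsed : [parsed];
}

// "placeholder" stands in for real message fields.
const single = parseEsbPayload('{"placeholder":"single alarm"}');
const list = parseEsbPayload(Buffer.from('[{"placeholder":1},{"placeholder":2}]'));
```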

Device Types¶

A customer (tenant) can subscribe to alarms, events, and other device updates through the ESB for the following device types:

- TCM100

- ACM200

- ACM250

Subscriptions¶

Based on the data types and device types, an administrator can create different subscriptions for a customer using the APM UI. Subscriptions can cover alarms, events, device lists, and so on. An administrator can set up a subscription for a tenant at installation time, or update it later using the ESB configuration screen.

The following subscription types are available. Please refer to the user manual for the detailed steps to enable and disable subscriptions using the APM UI.

| Subscription Name | Device Type | Subscription Type | Description |
|---|---|---|---|
| TCM100 Live Alarms | TCM100 | Alarms | By enabling this subscription, the APM application publishes TCM100 alarms to the respective Kafka topic. |
| TCM100 Live Events | TCM100 | Events | By enabling this subscription, the APM application publishes TCM100 events to the respective Kafka topic as soon as they are identified in APM. |
| ACM200 Live Alarms | ACM200 | Alarms | By enabling this subscription, the APM application publishes ACM200 alarms to the respective Kafka topic as soon as they are identified in APM. |
| ACM200 Live Events | ACM200 | Events | By enabling this subscription, the APM application publishes ACM200 events to the respective Kafka topic as soon as they are identified in APM. |
| ACM250 Live Alarms | ACM250 | Alarms | By enabling this subscription, the APM application publishes ACM250 alarms to the respective Kafka topic as soon as they are identified in APM. |
| ACM250 Live Events | ACM250 | Events | By enabling this subscription, the APM application publishes ACM250 events to the respective Kafka topic as soon as they are identified in APM. |
| Periodic Active Alarms List | All | Alarms List | By enabling this subscription, the APM application publishes all active alarms to a Kafka topic based on a schedule. The schedule is configurable; for example, it can run every 2 hours. |
| Periodic Device List | All | Device List | By enabling this subscription, the APM application publishes all active devices to a Kafka topic based on a schedule. The schedule is configurable; for example, it can run every 2 hours. |
| Periodic Events List | All | Events List | By enabling this subscription, the APM application publishes all events identified in APM during a time window to a Kafka topic. The time window is configurable; for example, it can cover the last 2 hours. |
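
A consumer application may want to keep this catalogue in code, for example to check which subscriptions apply to a given device type. The sketch below mirrors the table as plain data; `SUBSCRIPTIONS` and `subscriptionsFor` are hypothetical names, and the actual Kafka topic names are deliberately left out because they are not listed here.

```javascript
// The subscription table above as plain data (topic names are not included).
const SUBSCRIPTIONS = [
  { name: "TCM100 Live Alarms", deviceType: "TCM100", type: "Alarms" },
  { name: "TCM100 Live Events", deviceType: "TCM100", type: "Events" },
  { name: "ACM200 Live Alarms", deviceType: "ACM200", type: "Alarms" },
  { name: "ACM200 Live Events", deviceType: "ACM200", type: "Events" },
  { name: "ACM250 Live Alarms", deviceType: "ACM250", type: "Alarms" },
  { name: "ACM250 Live Events", deviceType: "ACM250", type: "Events" },
  { name: "Periodic Active Alarms List", deviceType: "All", type: "Alarms List" },
  { name: "Periodic Device List", deviceType: "All", type: "Device List" },
  { name: "Periodic Events List", deviceType: "All", type: "Events List" },
];

// Hypothetical helper: names of the subscriptions that cover a device type,
// including the periodic lists that apply to all device types.
function subscriptionsFor(deviceType) {
  return SUBSCRIPTIONS
    .filter((s) => s.deviceType === deviceType || s.deviceType === "All")
    .map((s) => s.name);
}

const acm200Subscriptions = subscriptionsFor("ACM200");
```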

ESB Connectivity Configuration¶

The ESB is currently implemented using Apache Kafka with real-time data streaming capabilities.

Customers can pull published Alarms, Events, and messages from the ESB by establishing connectivity to Kafka.

Customer applications can connect to the ESB service with their preferred technology and programming language. However, we recommend using Node.js and its related dependencies to build a consumer application as shown below. The following sections describe the required packages, the configuration, and code snippets that illustrate the concept and how it works. In addition, a complete, running client example in Node.js will be delivered to customers, which can be reused and extended to fulfill specific requirements.

Packages to install¶

Install the following packages:

Node.js and npm (its package manager, available on the official Node.js website) for your specific operating system (Windows, Linux, macOS, etc.)

A Kafka client library for Node.js, such as kafkajs. npm can be used for this installation as well; run the following command in a shell or terminal window:

npm install kafkajs

Customer Application Configuration¶

The consumer application can be configured with the following details to establish connectivity to the ESB Kafka broker.

The consumer must be configured with at least one broker, in this case the ESB broker.

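// Set the client ID, the client secret, and the broker port during installation. Kafka uses the client ID to trace requests back to the consumer.

// getClientId(), getClientSecret(), getESBBrokerHost(), and getESBBrokerPort() are placeholders for however your application loads these values; getESBBrokerHost() is a hypothetical addition for the broker host name.

const ESBclientId = getClientId();

const ESBclientSecret = getClientSecret(); // used later for authentication

const ESBBrokerHost = getESBBrokerHost();

const ESBBrokerPort = getESBBrokerPort();

// Create the broker list

const { Kafka } = require("kafkajs");

const ESBKafka = new Kafka({

clientId: ESBclientId,

brokers: [ESBBrokerHost + ":" + ESBBrokerPort],

});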

SASL Mechanism¶

The sasl option can be used to configure the authentication mechanism.

const SASL_Mechanism = 'plain';
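
As an illustration of where the mechanism goes, kafkajs accepts `ssl` and `sasl` options in the client configuration object. The sketch below only builds that object, so it runs without a broker. `buildKafkaConfig`, its parameter names, the example host, and the choice to use the client ID as the SASL username are assumptions made for this example; use the credentials scheme agreed for your installation.

```javascript
// Sketch: build the options object that would be passed to `new Kafka(...)` from kafkajs.
// buildKafkaConfig and its parameter names are hypothetical.
function buildKafkaConfig({ clientId, clientSecret, brokerHost, brokerPort }) {
  return {
    clientId,
    brokers: [`${brokerHost}:${brokerPort}`],
    // Assumption: TLS is enabled, because SASL/PLAIN sends credentials unencrypted.
    ssl: true,
    sasl: {
      mechanism: "plain",
      username: clientId,     // assumption: the client ID doubles as the SASL username
      password: clientSecret,
    },
  };
}

// Example values only; "esb.example.com" and port 9093 are placeholders.
const exampleConfig = buildKafkaConfig({
  clientId: "demo-client",
  clientSecret: "demo-secret",
  brokerHost: "esb.example.com",
  brokerPort: 9093,
});
```

With kafkajs installed, the result could be used as `new Kafka(exampleConfig)`.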

Kafka Schema Registry¶

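Schema Registry offers a centralized location for the management and validation of schemas related to topic message data, facilitating the serialization and deserialization of the data transiting across the network. This ensures data consistency and compatibility.

Using Avro schemas in Kafka ensures speed, consistency, and efficiency.

Consumers can retrieve the topic schema from the registry.

// Assumption: SchemaRegistry comes from the @kafkajs/confluent-schema-registry package, whose constructor takes a { host } option. getKafkaSchemaRegistryURL() is a placeholder for however your application loads the registry URL.

const { SchemaRegistry } = require("@kafkajs/confluent-schema-registry");

const ESBschemaRegistryUrl = getKafkaSchemaRegistryURL();

const ESBregistry = new SchemaRegistry({ host: ESBschemaRegistryUrl });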
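
To show how a consumer can tell which schema a message was written with, the sketch below reads the schema ID from a message value. It assumes the registry follows the Confluent wire format (one magic byte with value 0, then a 4-byte big-endian schema ID, then the Avro payload); whether the ESB uses this format is an assumption, and `readSchemaId` is a hypothetical helper.

```javascript
// Sketch, assuming the Confluent wire format:
// byte 0 = magic byte (0), bytes 1-4 = schema ID (big-endian), rest = Avro payload.
function readSchemaId(messageValue) {
  if (messageValue.length < 5 || messageValue.readUInt8(0) !== 0) {
    throw new Error("Message is not in the expected wire format");
  }
  return messageValue.readUInt32BE(1);
}

// Hand-built example message with schema ID 42 and dummy payload bytes.
const exampleValue = Buffer.concat([
  Buffer.from([0, 0, 0, 0, 42]),
  Buffer.from("dummy-avro-bytes"),
]);
const exampleSchemaId = readSchemaId(exampleValue);
```

A registry client library normally performs this step itself when decoding a message, so the helper is mainly useful for diagnostics.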

Enabling Authentication¶

The following steps are required:

- A broker certificate is installed on each broker.

- The broker certificate is distributed to the clients and installed in their truststore.

- A client certificate is installed on each client.

- The client certificate is installed in each broker’s truststore.
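
On the client side, the certificate steps above correspond to the `ssl` option of kafkajs, which takes Node.js TLS options such as `ca`, `cert`, and `key`. The following sketch only assembles that object from PEM strings. `buildSslOptions` and its parameter names are hypothetical, and the PEM strings in the example are placeholders, not real certificates.

```javascript
// Sketch: TLS options for mutual authentication, to be used as the `ssl` option of kafkajs.
// buildSslOptions and its parameter names are hypothetical.
function buildSslOptions({ caPem, clientCertPem, clientKeyPem }) {
  return {
    rejectUnauthorized: true, // verify the broker certificate against the trusted CA
    ca: [caPem],              // certificate(s) the client trusts (its truststore)
    cert: clientCertPem,      // client certificate presented to the broker
    key: clientKeyPem,        // private key that belongs to the client certificate
  };
}

// Placeholder strings; in practice these would be read from PEM files, e.g. with fs.readFileSync.
const exampleSsl = buildSslOptions({
  caPem: "<CA certificate PEM>",
  clientCertPem: "<client certificate PEM>",
  clientKeyPem: "<client private key PEM>",
});
```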

ESBKafka Consumer Configuration¶

// The first part repeats the initial configuration, which is done once. It is shown here to illustrate how the instructions relate to each other, including the creation of the consumer application.

const { Kafka } = require("kafkajs");

// For testing on a local machine with Kafka and all related dependencies installed. The broker host localhost is equivalent to 127.0.0.1, and 9092 is the standard Kafka port; a different port can be selected.

const ESBKafka = new Kafka({

clientId: "myCustomerID",

brokers: ["localhost:9092"],

});

const ESBclientConsumer = ESBKafka.consumer({ groupId: "customerGroup" });

Connect and Consume Messages¶

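The following is a general example of how to connect and consume messages:

const ESBalarmTopic = "Alarms";

const run = async () => {

await ESBclientConsumer.connect();

// fromBeginning: true starts at the earliest available offset when the consumer group has no committed offset

await ESBclientConsumer.subscribe({ topic: ESBalarmTopic, fromBeginning: true });

await ESBclientConsumer.run({

// kafkajs passes the topic, the partition, and the message to the handler

eachMessage: async ({ topic, partition, message }) => {

console.log({

topic,

partition,

value: message.value.toString(),

});

},

});

};

// Start the consumer and log any connection or processing error

run().catch(console.error);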
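
In practice, a message handler usually needs to cope with empty or malformed payloads instead of calling `toString()` unconditionally. The sketch below shows one possible way to decode the object that kafkajs passes to `eachMessage` (it contains `topic`, `partition`, and `message`). `decodeMessage` is a hypothetical helper, and the test payloads are made up for the example.

```javascript
// Sketch: defensively decode the payload that kafkajs passes to eachMessage.
// decodeMessage is a hypothetical helper; it returns null for unusable messages.
function decodeMessage({ topic, partition, message }) {
  if (message.value === null) {
    return null; // Kafka messages may carry no value
  }
  try {
    return {
      topic,
      partition,
      offset: message.offset,
      data: JSON.parse(message.value.toString()),
    };
  } catch (error) {
    return null; // the value was not valid JSON
  }
}

// Made-up payloads that mimic the shape of the eachMessage argument.
const decoded = decodeMessage({
  topic: "Alarms",
  partition: 0,
  message: { offset: "7", value: Buffer.from('{"a":1}') },
});
const malformed = decodeMessage({
  topic: "Alarms",
  partition: 0,
  message: { offset: "8", value: Buffer.from("not json") },
});
```

Inside a consumer, the helper could be called as `eachMessage: async (payload) => { const record = decodeMessage(payload); ... }`.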